Graph neural networks in vision-language image understanding: a survey

Published in The Visual Computer, 2024

Abstract

2D image understanding is a complex problem within computer vision, but it holds the key to providing human-level scene comprehension. It goes beyond identifying the objects in an image and instead attempts to understand the scene as a whole. Solutions to this problem underpin a range of tasks, including image captioning, visual question answering (VQA), and image retrieval. Graphs provide a natural way to represent the relational arrangement between objects in an image. As a result, graph neural networks (GNNs) have in recent years become a core architectural component of many 2D image understanding pipelines, especially in the VQA group of tasks. In this survey, we review this rapidly evolving field and provide three things: a taxonomy of graph types used in 2D image understanding approaches, a comprehensive list of the GNN models used in this domain, and a roadmap of potential future developments. To the best of our knowledge, this is the first comprehensive survey that covers image captioning, visual question answering, and image retrieval techniques that use GNNs as the main part of their architecture.
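The following minimal sketch illustrates the abstract's central idea. It assumes a toy scene graph in which each node is a detected object with a hypothetical 2D feature vector, and each edge is a (subject, predicate, object) triple. One step of mean-aggregation message passing updates each object's feature using the objects it is related to. This is a parameter-free simplification for illustration, not any specific model reviewed in the survey. A real GNN layer would also apply learned weights and a nonlinearity, and relation-aware models would use the predicates, which this sketch ignores.

```python
from typing import Dict, List, Tuple

# Hypothetical node features, e.g. pooled region features from an object detector.
Features = Dict[str, List[float]]
# Scene-graph edges as (subject, predicate, object) triples.
Triples = List[Tuple[str, str, str]]


def neighbours(triples: Triples) -> Dict[str, List[str]]:
    """Build an undirected adjacency list; predicates are ignored in this sketch."""
    adj: Dict[str, List[str]] = {}
    for subj, _pred, obj in triples:
        adj.setdefault(subj, []).append(obj)
        adj.setdefault(obj, []).append(subj)
    return adj


def message_passing_step(feats: Features, triples: Triples) -> Features:
    """One mean-aggregation step: each node's new feature is the element-wise
    average of its own feature and its neighbours' features."""
    adj = neighbours(triples)
    updated: Features = {}
    for node, vec in feats.items():
        group = [vec] + [feats[n] for n in adj.get(node, [])]
        updated[node] = [sum(col) / len(group) for col in zip(*group)]
    return updated


# Usage: a toy image showing a man riding a horse and wearing a hat.
feats = {"man": [1.0, 0.0], "horse": [0.0, 1.0], "hat": [0.0, 0.0]}
triples = [("man", "riding", "horse"), ("man", "wearing", "hat")]
out = message_passing_step(feats, triples)
print(out["horse"])  # [0.5, 0.5]
print(out["hat"])    # [0.5, 0.0]
```

After one step, the "horse" and "hat" nodes carry information about the "man" they are related to. Stacking several such steps lets information propagate across longer paths in the graph.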

Citation

@article{senior2024graph,
  title={Graph neural networks in vision-language image understanding: A survey},
  author={Senior, Henry and Slabaugh, Gregory and Yuan, Shanxin and Rossi, Luca},
  journal={The Visual Computer},
  pages={1--26},
  year={2024},
  publisher={Springer}
}

Key Takeaway

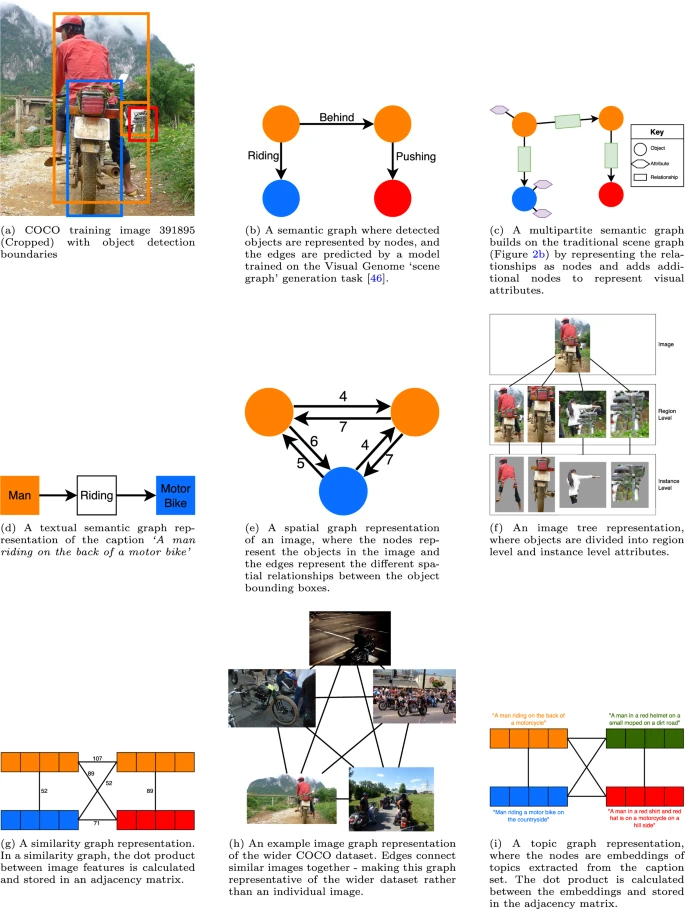

We present a taxonomy of the different graph types used in vision-language tasks. A visual representation of these graph structures is shown in Figure 2 of our paper.
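Graph types differ mainly in how their nodes are connected. The sketch below is a hypothetical illustration, not the paper's definitions. It builds two graphs over the same object bounding boxes. The first is a fully connected graph that links every pair of regions. The second is a spatial graph that links only regions whose boxes overlap, meaning their intersection-over-union (IoU) exceeds an assumed threshold. The box format `(x1, y1, x2, y2)`, the function names, and the IoU criterion are all assumptions chosen for simplicity. Spatial graphs in the literature often encode richer geometric relations.

```python
from itertools import combinations
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)
Edge = Tuple[int, int]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def fully_connected_graph(num_regions: int) -> List[Edge]:
    """Link every pair of regions (undirected, no self-loops)."""
    return list(combinations(range(num_regions), 2))


def spatial_graph(boxes: Sequence[Box], threshold: float = 0.0) -> List[Edge]:
    """Link a pair of regions only if their boxes overlap (IoU > threshold)."""
    return [
        (i, j)
        for i, j in combinations(range(len(boxes)), 2)
        if iou(boxes[i], boxes[j]) > threshold
    ]


# Usage: two overlapping boxes and one isolated box.
boxes = [(0, 0, 2, 2), (1, 1, 3, 3), (5, 5, 6, 6)]
print(fully_connected_graph(len(boxes)))  # [(0, 1), (0, 2), (1, 2)]
print(spatial_graph(boxes))               # [(0, 1)]
```

Both graphs share the same nodes. Only the edge rule changes, and that rule determines which regions can exchange messages in a downstream GNN.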

Citation: Senior, H., Slabaugh, G., Yuan, S., & Rossi, L. (2024). Graph neural networks in vision-language image understanding: A survey. The Visual Computer, 1-26. https://link.springer.com/article/10.1007/s00371-024-03343-0